You want to offer your staff the best corporate training, but how do you measure the effectiveness of a learning course?

Training evaluation models help you keep the quality of your training high. By evaluating training programs for ROI, quality, and relevance to your employees, you keep staff well trained and support employee retention and revenue.

Evaluation models designed specifically for training address this measurement challenge and contribute to the success of organizational training programs.

This article explores data-driven methodologies and emerging trends that will influence the training evaluation landscape in the forthcoming year. It delves into effective models set to shape this sphere, offering a detailed examination of tools capable of optimizing training outcomes for enduring success.

Training evaluation models are systematic frameworks for assessing the effectiveness, efficiency, and outcomes of your organization’s employee training programs.

These structured approaches measure learning impact and alignment with goals, and they guide data-driven decision-making.

With levels ranging from participant reactions to business impact, these models facilitate continuous improvement and showcase learning initiative value to stakeholders when you include them in your employee training plan.

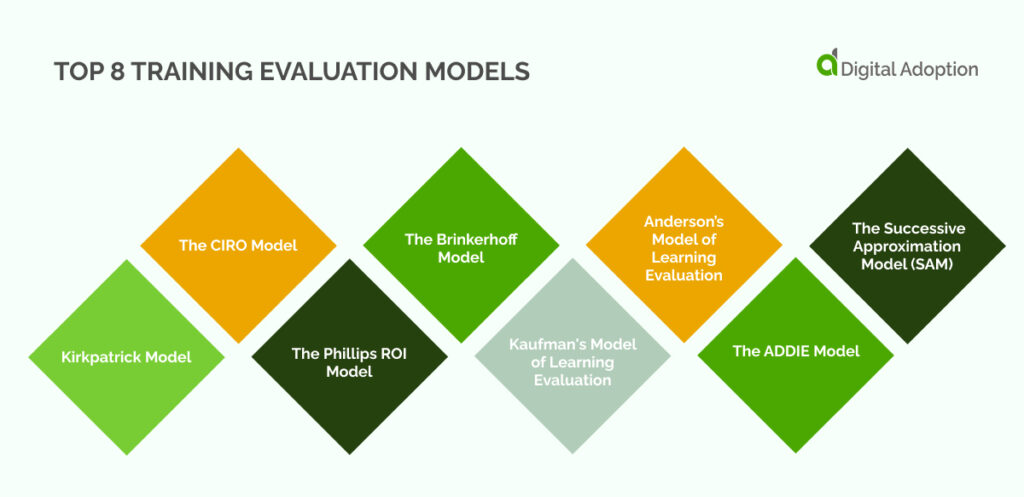

Explore the intricacies of effective training assessment with an in-depth examination of the top 6 training evaluation models and their practical applications.

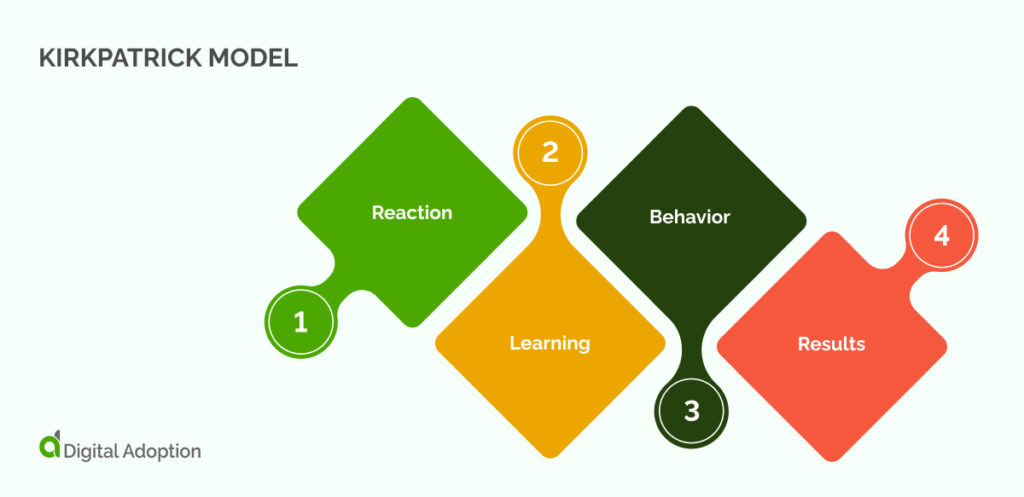

The number one spot for training evaluation models is the Kirkpatrick Model, which so many organizations use for its ease of use and effectiveness.

The Kirkpatrick Model, a renowned L&D evaluation framework, analyzes the efficacy and outcomes of employee training programs. It is also the core and inspiration for many other evaluation models.

Considering both informal and formal training styles, the model assesses training against four levels: reaction, learning, behavior, and results.

The Kirkpatrick Model is suited to learning and development professionals as it supports a range of applications in enterprise training environments.

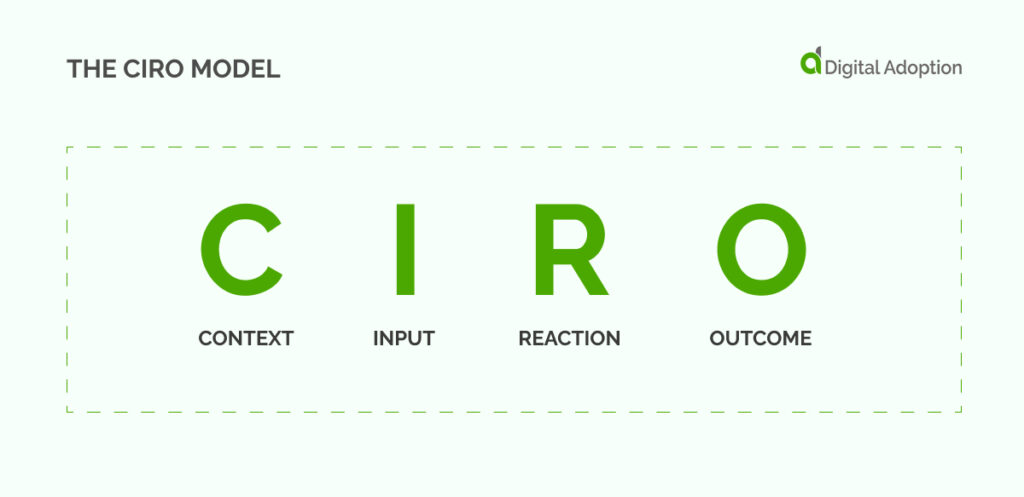

Second place goes to the commonly used and popular CIRO Model.

The all-encompassing CIRO (Context, Input, Reaction, Outcome) training evaluation method developed by Peter Warr, Michael Bird, and Neil Rackham provides a complete approach to evaluating the efficacy of training initiatives.

Much like the Kirkpatrick Model, the CIRO model assesses training against four criteria, but it does so in a more holistic way: context, input, reaction, and outcome.

This model is most suitable for management training programs. It doesn’t focus on behavior and may not be suitable for individuals at lower organizational levels. Its context stage revolves around identifying and assessing training needs.

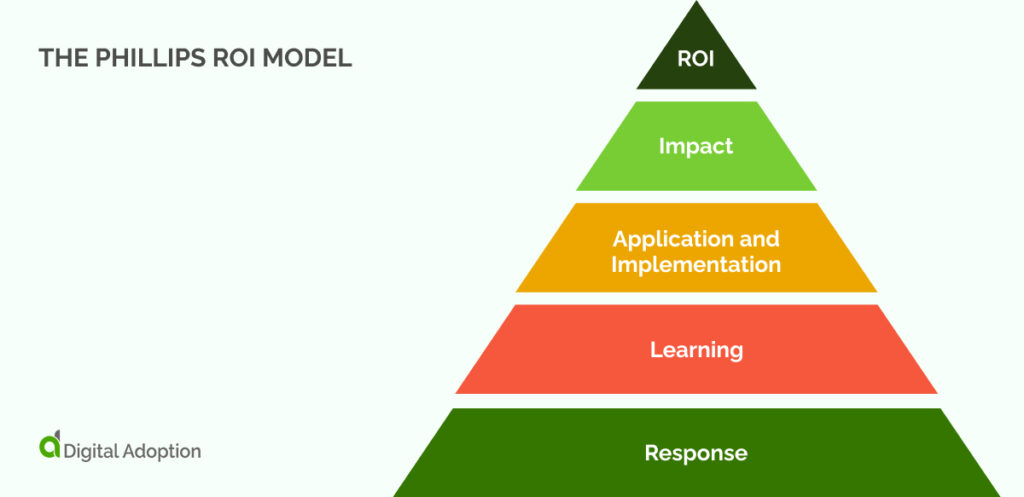

The third model in the list is the Phillips ROI model.

The Phillips ROI Model is an approach that links training program costs to tangible outcomes to ensure organizations get a return on their investment.

Expanding upon the Kirkpatrick Model, Phillips ROI categorizes data from various employee training programs to assess five areas of training quality.

This model is best for teams, including learning and development professionals and CFOs (chief financial officers), to ensure ROI on learning investments.
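The ROI level of the Phillips model is commonly expressed as net program benefits divided by program costs, multiplied by 100. The sketch below shows that arithmetic; the function name and the figures are hypothetical and chosen only for illustration, and it assumes the benefits have already been converted to a monetary value.

```python
def training_roi_percent(monetary_benefits: float, program_costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    net_benefits = monetary_benefits - program_costs
    return net_benefits / program_costs * 100


# Hypothetical figures, not taken from any real program.
roi = training_roi_percent(monetary_benefits=150_000, program_costs=100_000)
print(f"ROI: {roi:.1f}%")
```

A positive result means the program returned more than it cost; a result of zero means it broke even.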

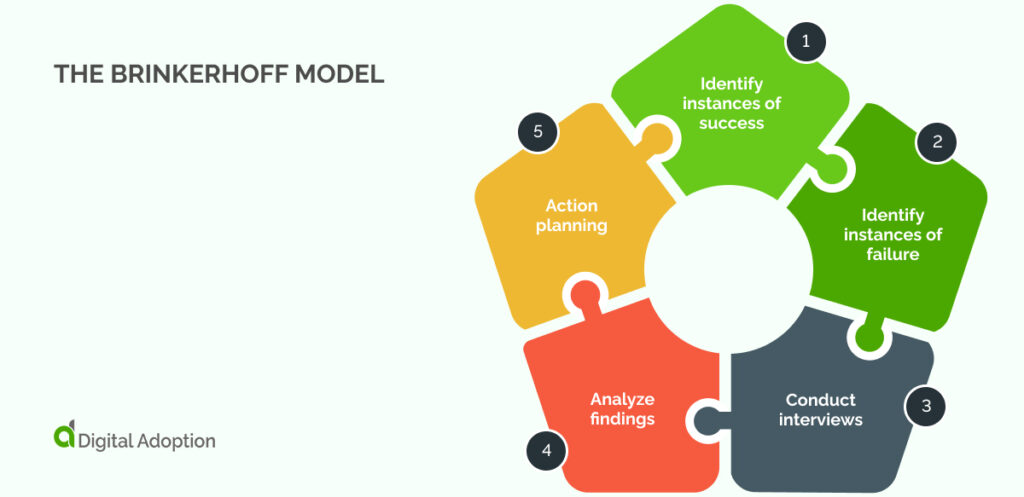

The Brinkerhoff Model is in fourth place as it sees everyday use, although it has slightly more specialized use cases than the above models.

The success case method, crafted by Robert O. Brinkerhoff, is a thorough training evaluation model. Its aim is to pinpoint and scrutinize the effects of training initiatives by concentrating on remarkable success or failure.

This model has five steps, beginning with identifying the instances of success.

This model is best for larger enterprises and has been successful for NGOs and government departments. The Brinkerhoff Evaluation Institute offers courses for those who want to implement this model.
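As a rough illustration of the first step, identifying the most and least successful cases, the sketch below ranks participants by a single post-training performance score and picks the extremes for follow-up interviews. The score data, the function name, and the idea of using one numeric score are all assumptions made for this example; in practice the method may draw on surveys or other evidence.

```python
def select_extreme_cases(scores: dict, n: int = 2):
    """Return (top n, bottom n) participant names ranked by score."""
    if n < 1 or len(scores) < 2 * n:
        raise ValueError("need at least 2 * n participants to avoid overlap")
    # Sort from highest score to lowest.
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    successes = [name for name, _ in ranked[:n]]
    non_successes = [name for name, _ in ranked[-n:]]
    return successes, non_successes


# Hypothetical post-training performance scores.
scores = {"Ana": 92, "Ben": 48, "Chen": 77, "Dee": 35, "Eli": 88}
successes, non_successes = select_extreme_cases(scores, n=2)
print("Interview as success cases:", successes)
print("Interview as non-success cases:", non_successes)
```

Looking at both ends of the ranking reflects the model's focus on remarkable success or failure rather than on the average participant.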

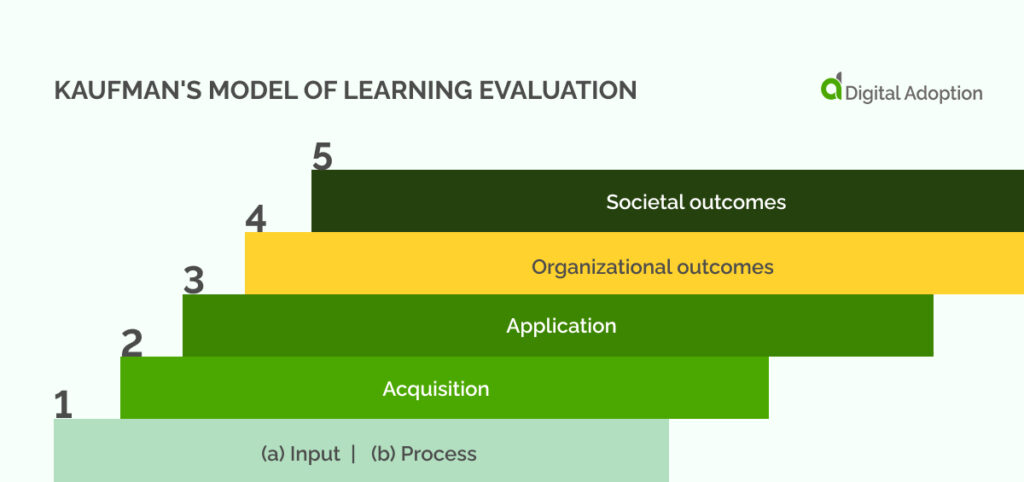

Kaufman’s Model of Learning Evaluation is in fifth place but remains effective.

Kaufman’s model, rooted in the Kirkpatrick Model, serves as a response and enhancement to Kirkpatrick’s approach. It seeks to elevate and refine the evaluation process.

Kaufman’s model introduces five levels of training assessment. Because the first level is split into two parts, (a) input and (b) process, it is sometimes described as having six levels:

(b) Process: Assess the acceptability and efficiency of the training procedures.

Kaufman’s model is effective for any learning and development team, especially teams focused on allocating resources for learning.

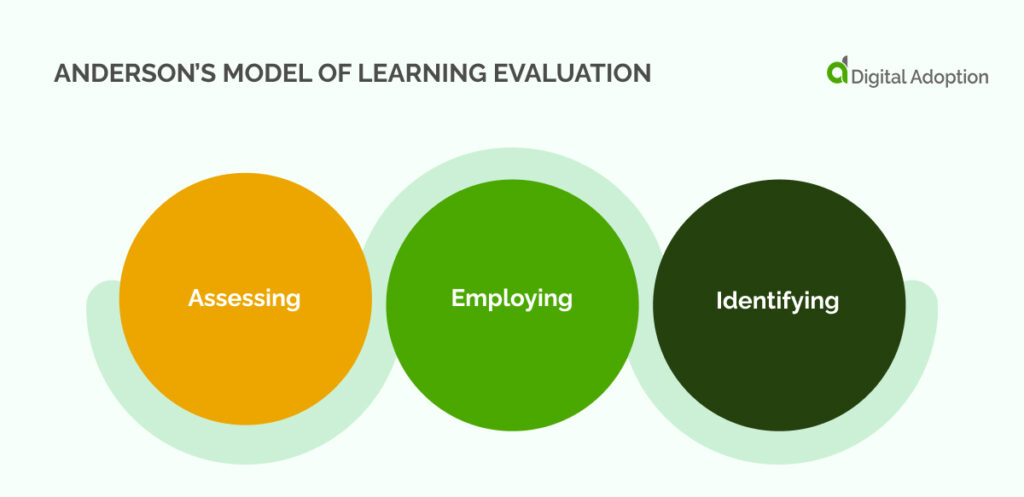

Anderson’s Model of Learning Evaluation is a streamlined three-stage evaluation cycle, implemented at the organizational level, that learning and development professionals use to gauge training effectiveness.

This model places a greater emphasis on aligning training goals with the organization’s strategic objectives.

The three phases of Anderson’s Model involve:

Anderson’s Model works well for L&D professionals who want a simplified evaluation tool they can implement quickly because of its small number of steps.

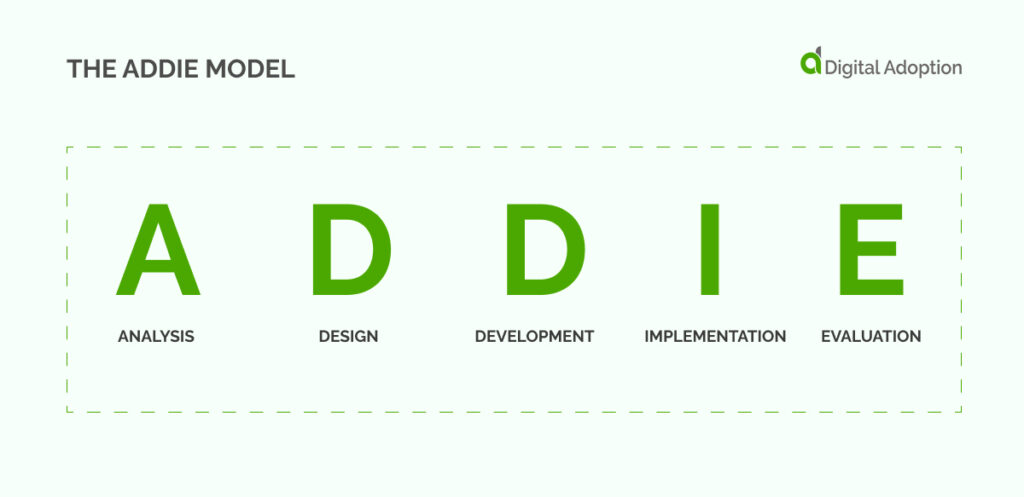

The ADDIE (Analysis, Design, Development, Implementation, Evaluation) Model is a systematic instructional design framework that guides the development of effective training programs.

It involves a five-step process, starting with analyzing training needs, followed by designing the program, developing materials, implementing the training, and concluding with a comprehensive evaluation to measure its effectiveness.

The ADDIE Model is ideal for instructional designers and training developers, providing a structured approach to creating, delivering, and assessing training programs.

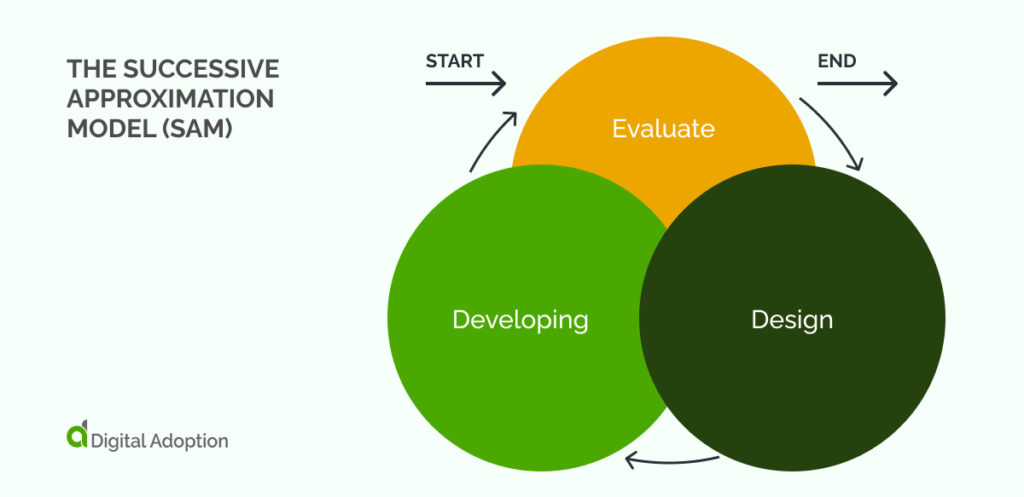

The Successive Approximation Model (SAM) is an agile instructional design model that emphasizes iterative development and continuous feedback throughout the training creation process, making training creation efficient and adaptable.

SAM involves a series of rapid iterations, focusing on collaboration and frequent evaluations with stakeholders. It starts with an initial prototype, followed by successive refinements based on feedback until the final product is achieved.

The Successive Approximation Model is suited to organizations and teams looking for a flexible and adaptive approach to instructional design, especially in dynamic and developing learning environments.

Implementing training evaluation models requires careful planning to ensure precise and meaningful outcomes. Consider the following best practices:

Ensure that the evaluation mirrors the specific goals and objectives of the training program. Each evaluation level should resonate with the intended outcomes, from knowledge acquisition to behavioral change and organizational impact.

Engage key stakeholders, including trainers, participants, and organizational leaders, in the evaluation process design. Their input ensures the model captures relevant aspects of the training and addresses their expectations.

Design data collection methods that are reliable and suitable for each evaluation level. This practice includes pre- and post-training surveys, assessments, interviews, observations, and performance metrics. Ensuring data accuracy and consistency is crucial for meaningful analysis.

Before implementing the training, establish baseline measures to assess the initial state of participants’ knowledge, skills, and behaviors. This baseline serves as a reference point for evaluating the impact of the training and identifying improvements.
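A minimal sketch of how a baseline can serve as a reference point: compare each participant's pre-training score with their post-training score and average the change. The participant IDs, scores, and function name are hypothetical, and the sketch assumes the same assessment was used both times.

```python
from statistics import mean

# Hypothetical assessment scores (0-100), keyed by participant ID.
baseline = {"p1": 55, "p2": 62, "p3": 70}
post_training = {"p1": 75, "p2": 70, "p3": 82}


def score_gains(before: dict, after: dict) -> dict:
    """Return the per-participant change for participants present in both sets."""
    shared = before.keys() & after.keys()
    return {pid: after[pid] - before[pid] for pid in sorted(shared)}


gains = score_gains(baseline, post_training)
average_gain = mean(gains.values())
print(gains)
print(f"Average gain: {average_gain:.1f} points")
```

Participants who are missing from either measurement are left out of the comparison, so the average only reflects people with both a baseline and a follow-up score.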

Foster a safe and non-judgmental environment that encourages participants to provide candid feedback about the training. Anonymous surveys or focus groups enable participants to share their thoughts openly, providing valuable insights for improvement.

Share the evaluation results with stakeholders clearly and transparently. Highlight successes and areas for improvement, demonstrating how the data aligns with the training objectives. Effective communication builds trust and underscores the value of evaluation efforts.

Treat the evaluation model as a tool for the ongoing improvement of your learning programs.

Regularly review evaluation methods for each learning tool, incorporate feedback, and refine the process to enhance its effectiveness over time.

Selecting an effective learning model is crucial for maximizing staff development. Still, it only delivers value when you pair it with the right tool for your organization’s staff needs.

This factor is crucial to success, so prioritize models aligned with your organization’s goals, training objectives, and employee needs, and use an employee training template where necessary. When you collect employee feedback on training, look for user-friendly interfaces, customizable features, and comprehensive analytics to ensure accurate assessments.

Selecting an appropriate training evaluation model facilitates precise progress measurement, targeted identification of areas for improvement, and fundamental enhancement of skills and performance in your staff.

Because training evaluation models are so diverse, independent research is essential to keep your chosen model aligned with the developing needs of your staff throughout each business year.